As a CTO, I’ve watched AI evolve from a niche research field into the backbone of modern software development. Today, you face a fundamental change in how intelligent systems are built. Traditional AI models are being eclipsed by Generative AI and Large Language Models (LLMs), which offer powerful new capabilities in automation, conversation, and human-machine interaction. Understanding this shift isn’t just an academic exercise; it’s critical to building the next generation of applications.

Traditional AI: Strict, Predictive, and Task-Specific

For years, AI was synonymous with structured, labeled data and carefully designed predictive models. Fraud detection, recommendation engines, and basic chatbots all followed this approach: train a model on historical data, optimize for accuracy, and deploy within well-defined constraints.

Take fraud detection in fintech. A traditional AI model analyzes transaction patterns, flagging anomalies based on predefined risk factors. It works well within structured data environments, but introduce an edge case—a sudden surge in legitimate transactions from a new location—and the model might fail, requiring manual intervention.
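To make the edge case concrete, here is a minimal sketch of flagging against predefined risk factors. It is a rule-style stand-in rather than a trained model, and the thresholds, field names, and country list are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical risk factors and thresholds, chosen only for illustration.
MAX_AMOUNT = 5_000.0
KNOWN_COUNTRIES = {"US", "CA"}
MAX_TX_PER_HOUR = 10


@dataclass
class Transaction:
    amount: float
    country: str
    tx_last_hour: int  # transactions by this customer in the past hour


def risk_flags(tx: Transaction) -> list[str]:
    """Return the predefined risk factors this transaction trips."""
    flags = []
    if tx.amount > MAX_AMOUNT:
        flags.append("high_amount")
    if tx.country not in KNOWN_COUNTRIES:
        flags.append("new_location")
    if tx.tx_last_hour > MAX_TX_PER_HOUR:
        flags.append("velocity")
    return flags


# A legitimate customer shopping heavily while abroad trips two rules at once.
traveller = Transaction(amount=120.0, country="PT", tx_last_hour=14)
print(risk_flags(traveller))
```

Nothing in these checks can tell a busy traveller from a stolen card, which is why cases like this end up in a manual review queue.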

The same strictness affected early conversational AI. Traditional chatbots relied on intent-matching and decision trees, failing whenever conversations moved away from their training scripts. Users had to conform to the system’s limitations, not the other way around.
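A rough sketch of keyword-based intent matching shows why these bots were brittle. The intents, keywords, and fallback message below are invented for the example; real systems used richer classifiers, but the failure mode is the same.

```python
from typing import Optional

# Hypothetical intents and trigger keywords for a scripted support bot.
INTENTS = {
    "track_order": {"track", "where", "status"},
    "cancel_order": {"cancel"},
    "refund": {"refund", "money"},
}

FALLBACK = "Sorry, I didn't understand that. Please choose an option from the menu."


def match_intent(message: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the message."""
    cleaned = message.lower().replace("?", " ").replace(".", " ")
    words = set(cleaned.split())
    for intent, keywords in INTENTS.items():
        if words & keywords:
            return intent
    return None


def reply(message: str) -> str:
    """Route to a scripted flow, or fall back when nothing matches."""
    intent = match_intent(message)
    if intent is None:
        return FALLBACK
    return f"Routing you to the '{intent}' flow."


print(reply("Where is my order?"))
print(reply("My parcel never showed up"))
```

The second message means the same thing as the first, yet it shares no keyword with any intent, so the user is sent back to the menu.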

Generative AI: Adaptive, Context-Aware, and Creative

Generative AI flipped this paradigm by learning from unstructured data. Instead of relying on labeled datasets, it absorbs vast amounts of text, images, or audio and generates content dynamically. This is what makes AI agents today fundamentally different from their predecessors—they can understand, summarize, and generate human-like responses in real time.

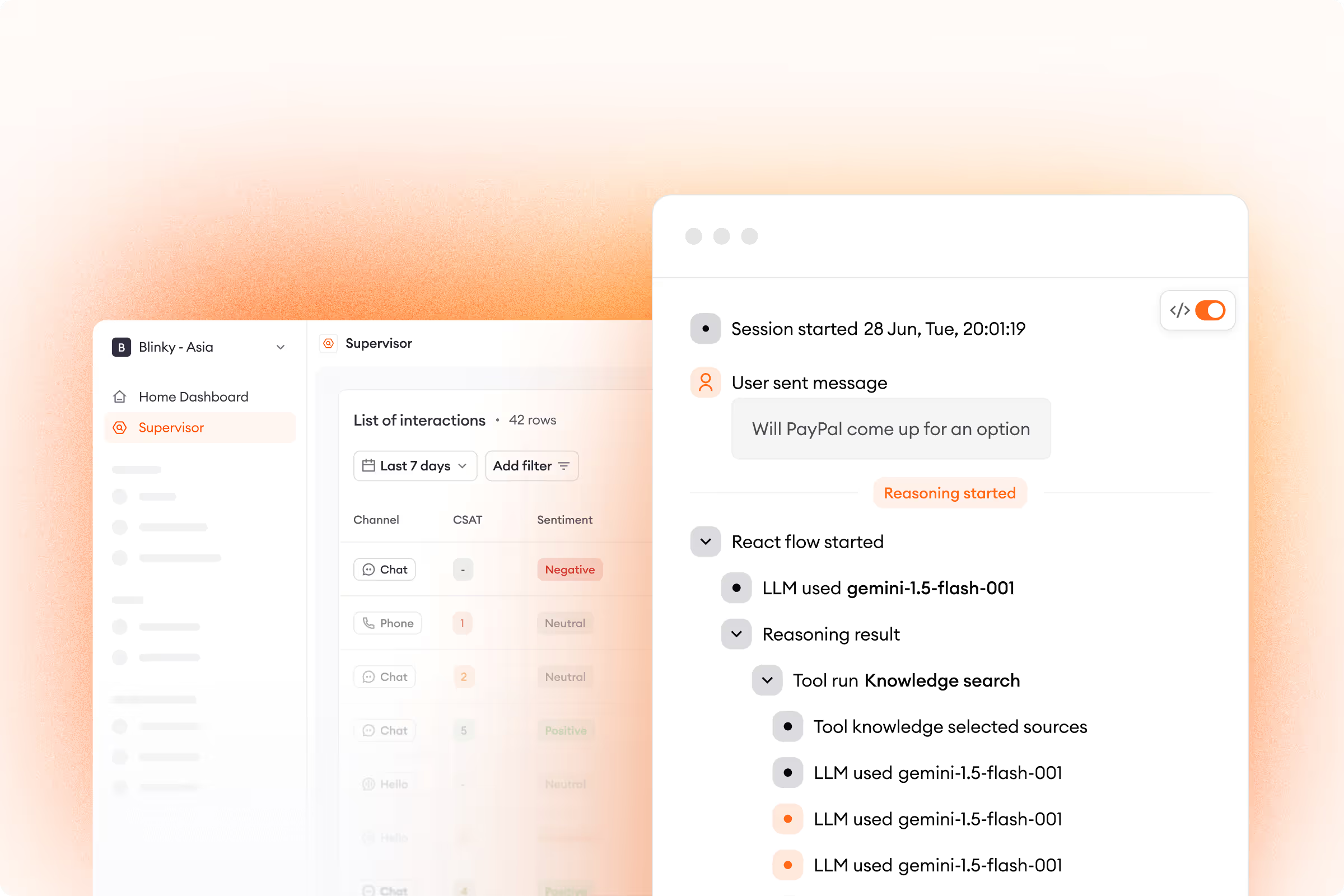

Consider customer support automation. A rule-based chatbot might require users to click through predefined flows to track an order. A Generative AI agent, on the other hand, can process natural language, retrieve order details dynamically, and respond conversationally: "Your order is out for delivery and should arrive by 3 PM." No rigid scripting—just fluid, human-like interaction.

Large Language Models: Scaling Language Understanding

LLMs are the engine behind Generative AI. Unlike traditional AI models that depend on structured training data, LLMs ingest billions of words from diverse sources—books, code repositories, web pages—to develop a deep understanding of language patterns.

Their training is based on self-supervised learning: the model learns to predict the next word (or a masked-out word) in a text sequence, so the text itself supplies the labels and no manual annotation is needed. Because that training signal is available in any raw text, the approach scales to models with hundreds of billions of parameters, which helps them pick up on subtleties like sarcasm, context shifts, and intent.
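The idea that raw text supplies its own labels can be illustrated with a toy example. The sketch below uses a simple word-pair counter in place of a neural network, and the tiny corpus is made up; only the way training pairs are derived mirrors what an LLM does.

```python
from collections import Counter, defaultdict

# Made-up corpus; a real model would see vastly more text.
corpus = (
    "the order is out for delivery . "
    "the order is delayed . "
    "the order is out for delivery ."
)
tokens = corpus.split()

# Self-supervision: each word's "label" is simply the word that follows it.
pairs = list(zip(tokens[:-1], tokens[1:]))

# Count how often each word follows each context word.
counts = defaultdict(Counter)
for context, target in pairs:
    counts[context][target] += 1


def predict_next(word):
    """Most frequent follower of `word` in the corpus, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


print(predict_next("is"))
```

No one annotated this data: the (context, target) pairs fall out of the text itself, which is what lets the same recipe run over enormous corpora.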

Imagine an AI-driven developer assistant. Traditional code autocompletion tools rely on syntax-based rules. An LLM-powered assistant, however, understands entire programming paradigms, suggesting refactored code, identifying inefficiencies, and even explaining logic in plain English. This is the difference between a static tool and an intelligent collaborator.

Your Dilemma: Choosing the Right Approach

Your challenge is not just understanding these differences but applying them effectively. Traditional AI still excels in structured, high-precision tasks like fraud detection and supply chain optimization. Generative AI, meanwhile, dominates in areas requiring flexibility—customer interactions, content generation, and knowledge retrieval.

LLMs come with trade-offs. Their computational requirements are massive, making fine-tuning and deployment non-trivial. The risk of AI hallucinations (generating plausible but incorrect information) also requires careful mitigation. Guardrails like prompt engineering, domain-specific fine-tuning, and reinforcement learning from human feedback (RLHF) are essential.
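As one small illustration of prompt engineering as a guardrail, the sketch below assembles a prompt that confines the model to supplied context. The function name, wording, and order data are hypothetical, and no particular model or vendor API is assumed; the result is just a string you would pass to whichever LLM you use.

```python
def build_grounded_prompt(question: str, context_snippets: list[str]) -> str:
    """Assemble a prompt that restricts the model to the supplied context."""
    context = "\n".join(f"- {snippet}" for snippet in context_snippets)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, reply exactly: I don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Made-up order data standing in for a lookup against your own systems.
prompt = build_grounded_prompt(
    "When will my order arrive?",
    ["Order 1042 is out for delivery.", "Estimated arrival: 3 PM today."],
)
print(prompt)
```

An instruction like this reduces hallucinations but does not eliminate them, so it is best treated as one layer alongside the other guardrails mentioned above.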

Comparison of Traditional AI and Generative AI

When deciding which AI approach to use in your applications, consider the strengths and limitations outlined in this table. If your project requires structured, high-accuracy decision-making, traditional AI may be the better choice. However, if you need adaptive, context-aware solutions that can generate content dynamically, Generative AI is the way forward. Use this comparison as a guide to make informed architectural decisions.

The Future: Generative AI as Your Playground

You are witnessing the decline of traditional interfaces. Menus, forms, and multi-step workflows are being replaced by AI-driven conversations. This shift presents a massive opportunity for you to embrace Generative AI and its capabilities.

With LLMs and Generative AI, you can create systems that don’t just react to inputs but anticipate needs, automate complex workflows, and enhance user experiences through natural interactions. Whether you’re building AI-powered copilots, autonomous customer support agents, or intelligent process automation, the potential is vast.

Generative AI lowers the barrier to software development itself. Code generation models can accelerate your engineering workflows, automating repetitive tasks and suggesting optimized implementations. AI-driven documentation and debugging tools are already reshaping productivity, allowing you to focus on high-level architecture rather than syntax.

At the heart of this transformation is a simple truth: humans think in conversations, not drop-down menus. The companies that master AI-driven conversational interfaces today will define the next era of digital experiences. If you understand and harness Generative AI, you will be the architect of this new paradigm.

For those willing to explore, experiment, and innovate, the future isn’t just about using AI—it’s about building with it. The internet was built around clicks. The future will be built around conversations.

Are you ready?
