Most AI agent platforms rely on prompting techniques to reduce hallucinations. They add guardrails, fine-tune models and hope for the best. In production, this means your AI agent will occasionally invent a policy, approve a refund you don't offer or promise something you can't deliver.

For customer-facing systems, that's not acceptable. The solution isn't better prompts. It's separating natural language understanding from business logic execution.
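To make the idea concrete, here is a minimal Python sketch of that separation, assuming a simple refund policy. It is a generic illustration, not Zowie's implementation. The order data, the 30-day window, and every name in it (`extract_intent`, `decide_refund`, `REPLIES`) are hypothetical. The understanding step only turns the customer's message into a structured request. A regex stands in for the LLM so the example runs offline. Whether a refund is approved, and what the agent is allowed to promise, comes from deterministic code.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical order store standing in for a real backend system.
ORDERS = {
    "A100": {"days_since_delivery": 5, "refundable": True},
    "B200": {"days_since_delivery": 45, "refundable": True},
    "C300": {"days_since_delivery": 2, "refundable": False},
}
REFUND_WINDOW_DAYS = 30  # assumed policy value, for illustration only


@dataclass
class RefundRequest:
    """Structured output of the understanding step."""
    order_id: Optional[str]


@dataclass
class Decision:
    approved: bool
    outcome: str


def extract_intent(text: str) -> RefundRequest:
    """Understanding step. In a real system an LLM would fill this structure;
    a regex for IDs like 'A100' stands in here so the sketch runs offline."""
    match = re.search(r"\b[A-Z]\d{3}\b", text)
    return RefundRequest(order_id=match.group(0) if match else None)


def decide_refund(req: RefundRequest) -> Decision:
    """Execution step: deterministic business rules. The model never decides this."""
    if req.order_id is None:
        return Decision(False, "ask_for_order_id")
    order = ORDERS.get(req.order_id)
    if order is None:
        return Decision(False, "order_not_found")
    if not order["refundable"]:
        return Decision(False, "item_not_refundable")
    if order["days_since_delivery"] > REFUND_WINDOW_DAYS:
        return Decision(False, "outside_refund_window")
    return Decision(True, "refund_approved")


# Replies are tied to decided outcomes, so the agent can only say what the rules allow.
REPLIES = {
    "ask_for_order_id": "Could you share your order number?",
    "order_not_found": "I couldn't find that order. Could you double-check the number?",
    "item_not_refundable": "This item isn't eligible for a refund under our policy.",
    "outside_refund_window": (
        f"Refunds are available within {REFUND_WINDOW_DAYS} days of delivery, "
        "and this order is outside that window."
    ),
    "refund_approved": "Your refund has been approved.",
}


def handle(text: str) -> str:
    """Understand the message, apply the rules, then reply from the outcome."""
    decision = decide_refund(extract_intent(text))
    return REPLIES[decision.outcome]
```

Because approval lives in `decide_refund`, the language model cannot approve a refund the policy doesn't allow. At worst it misreads the request, and the rules still apply to whatever it extracted.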

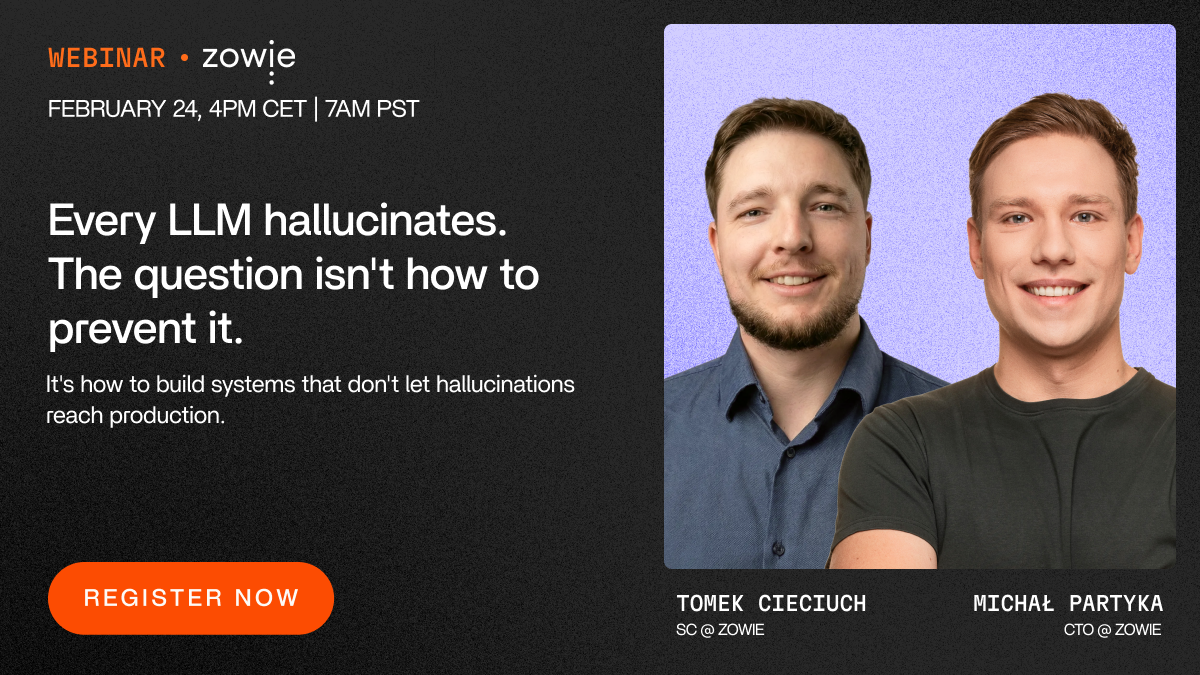

In this technical session, Michał Partyka (CTO, Zowie) walks through the architecture that eliminates hallucinations in business decisions.

You'll see how process-based execution keeps AI grounded while maintaining natural, conversational interactions. No vague promises, just the system design that works in production at enterprise scale.

Tomek Cieciuch (Solutions Consultant, Zowie) addresses the technical evaluation questions he hears from CTOs and engineering leaders every day: the questions that determine whether you can actually deploy AI agents in production or just run another pilot that goes nowhere.

Every LLM hallucinates. The question isn't how to prevent it.

You’ll learn:

Meet your hosts: