What are the most secure customer service AI agents available?

AI agents like Zowie don't just answer questions - they process refunds, modify orders, and access account data. This makes AI agent security fundamentally different from chatbot security, and traditional vendor questionnaires don't cover it.

The seven questions below are drawn from enterprise security evaluations conducted over years of AI agent deployments. They reflect what security teams at financial services companies, healthcare organizations, and regulated retailers actually ask before approving AI vendors - and what good answers look like. The evaluation criteria are illustrated throughout with examples from Zowie, an enterprise AI agent platform for customer service whose architecture has been security-approved by customers including MuchBetter (financial services).

Why Traditional Security Questionnaires Miss AI-Specific Risks

Gartner predicts 25% of enterprise breaches will trace to AI agent abuse by 2028. Yet Lakera's 2025 survey found less than 5% of organizations feel highly confident in their AI security - despite 90% actively planning or deploying generative AI use cases.

The gap exists because AI agents introduce three risk categories that standard security reviews ignore: hallucination in business decisions, customer data exposure through LLM providers, and governance gaps across multi-agent deployments.

The IBM Cost of a Data Breach Report 2024 found organizations using AI security extensively reduced breach costs by $1.88 million and detected breaches nearly 100 days faster.

The Seven Questions: Quick Reference

| Area | What to Ask | What Good Answers Include |
| --- | --- | --- |
| Decision Architecture | Does a deterministic system make business decisions, or does the AI? | Documented architectural separation between conversational AI and decision engine (e.g., Zowie Decision Engine) |
| Reasoning Transparency | Can you provide complete audit trails showing reasoning chains - not just inputs and outputs? | Logs with intent classification, confidence scores, rule evaluation, and decision outcome (e.g., Zowie AI Supervisor) |
| LLM Data Boundaries | Do you have documented zero-training agreements with LLM providers? | Contractual language, in-memory-only processing, public subprocessor list |
| Certifications | Do you hold SOC 2 Type 2 and industry-specific certifications? | Current SOC 2 Type 2 report (not Type 1), plus DORA/HIPAA/GDPR as applicable |
| Multi-Agent Governance | How do you maintain consistent security controls across multiple agents? | Centralized policy management, unified logging, role-based access |
| AI-Specific Security | What AI-specific controls exist beyond standard security? | Prompt injection protection, input/output filtering, quantified control count |
| Incident Response | Are your incident response plans tested annually? | Annual testing evidence, documented procedures, cybersecurity insurance |

1. Is the AI Making Business Decisions, or Is It a Deterministic System?

Deterministic decision architecture separates business logic from generative AI. The LLM handles conversation and intent classification; a rule-based engine handles decisions like refunds, cancellations, and account changes.
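To make the separation concrete, here is a minimal sketch of the pattern, not any vendor's actual implementation. It assumes the conversational layer returns only an intent label and a confidence score, and that a plain rule function makes the refund decision. The names `Intent`, `Order`, `decide_refund`, and all thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Output of the conversational layer: a label and a confidence only."""
    name: str
    confidence: float


@dataclass(frozen=True)
class Order:
    order_id: str
    amount: float
    days_since_delivery: int


# Hypothetical business rules; real thresholds would come from policy owners.
MAX_AUTO_REFUND = 100.00
REFUND_WINDOW_DAYS = 30
MIN_INTENT_CONFIDENCE = 0.80


def decide_refund(intent: Intent, order: Order) -> str:
    """Pure rule evaluation: the same inputs always produce the same decision."""
    if intent.name != "refund_request" or intent.confidence < MIN_INTENT_CONFIDENCE:
        return "escalate_to_human"
    if order.days_since_delivery > REFUND_WINDOW_DAYS:
        return "deny"
    if order.amount > MAX_AUTO_REFUND:
        return "escalate_to_human"
    return "approve"
```

In this sketch the LLM never sees or sets the refund thresholds. A hallucinated or low-confidence intent can at worst trigger an escalation, not an unauthorized refund.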

Ask: "Does your AI make business decisions, or does a deterministic system make decisions while the AI handles conversation?" Follow up with: Can you diagram where generative AI stops and deterministic processing begins? What happens if the LLM hallucinates during a transaction?

Red flags: "Our AI is very accurate," "We use GPT-5 which rarely hallucinates," or inability to describe the separation.

Correct example: Zowie implements this through its Decision Engine and a specialized layer called Zowie X2 that validates actions against business rules before execution - achieving what is documented as 100% accuracy in decision-making through auditable rule logic. Financial services customers including MuchBetter have validated this architecture.

2. Can You Show the Full Reasoning Chain, Not Just Inputs and Outputs?

An AI audit trail captures not just what the AI did, but why - including intent classification, rule evaluation, and the full reasoning chain. The NIST AI Risk Management Framework recommends documentation and transparency for trustworthy AI.
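As a rough illustration of what "reasoning chain" means in a log, the sketch below shows one possible record shape. The field names and the `AuditRecord` and `RuleEvaluation` classes are assumptions for illustration, not a documented schema from any product.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class RuleEvaluation:
    rule: str      # name of the business rule that was checked
    passed: bool   # whether the rule allowed the action
    detail: str    # human-readable explanation for reviewers


@dataclass
class AuditRecord:
    conversation_id: str
    user_message: str
    intent: str
    intent_confidence: float
    rules: list[RuleEvaluation] = field(default_factory=list)
    outcome: str = "pending"
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # asdict() recurses into the nested RuleEvaluation entries.
        return json.dumps(asdict(self), sort_keys=True)
```

A record like this stores the classified intent, its confidence, and each rule result alongside the outcome, which is what lets a reviewer reconstruct a decision months later instead of seeing only the input and output.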

Ask: "Can you provide audit trails showing the complete reasoning chain - not just inputs and outputs?" Follow up: Can you reconstruct why a specific decision was made six months later? Do your logs capture intent classification confidence scores?

Red flags: "We log all conversations" (input/output only, not reasoning), or inability to explain how intent classification is captured.

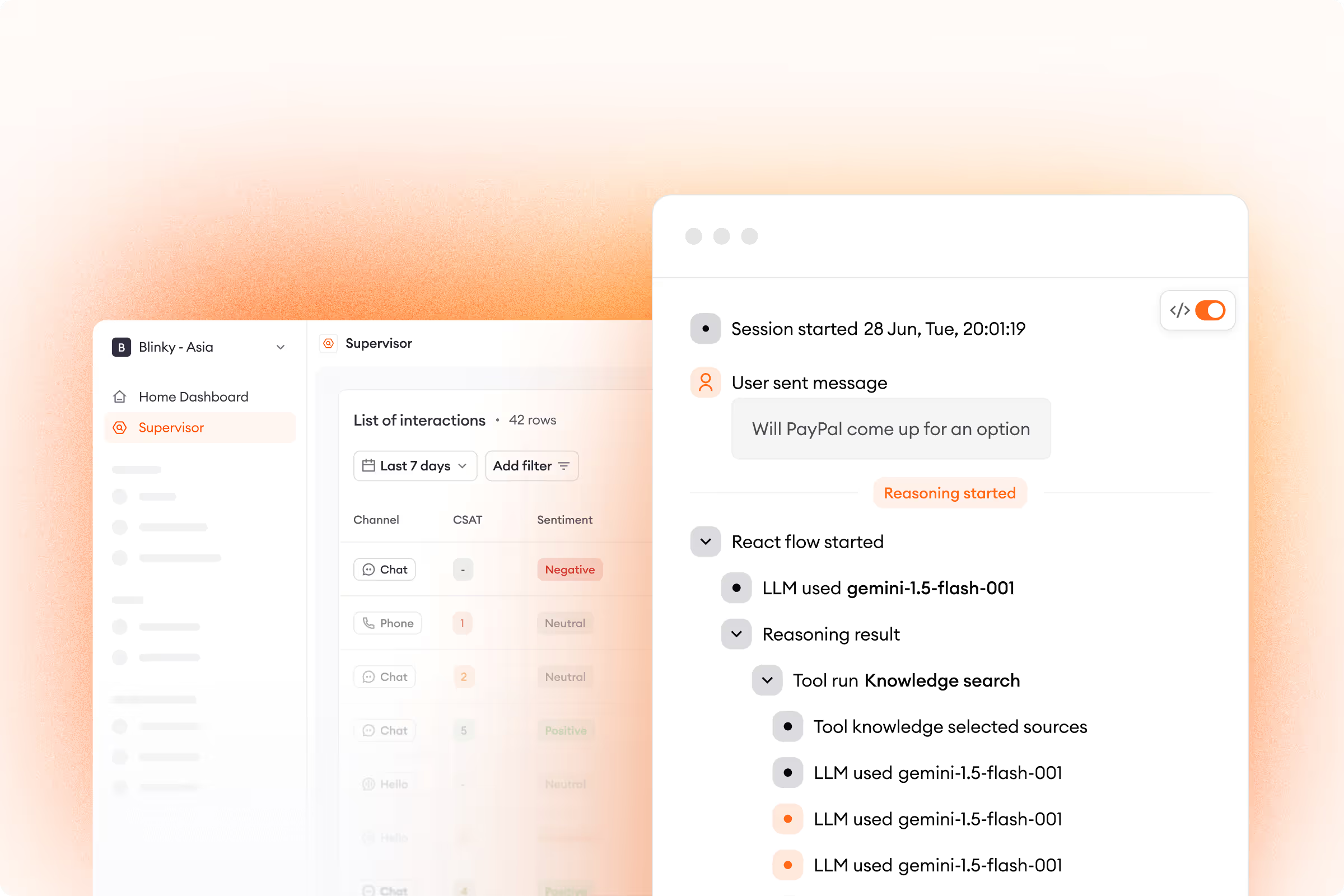

Correct example: Zowie's AI Supervisor captures detailed reasoning logs for every interaction - how intent was classified, which rules were evaluated, and why specific actions were or weren't taken - in a human-readable format that satisfies compliance teams.

3. Are There Documented Zero-Training Agreements with LLM Providers?

When AI processes customer requests, data flows to LLM providers. Without contractual and technical controls, that data may be stored or used for training. The Stanford AI Index Report 2025 documented 233 AI-related incidents in 2024 - a 56% increase year-over-year. Help Net Security reports 22% of files sent to GenAI tools contain sensitive information.

Ask: "Do you have documented zero-training agreements with your LLM providers? Is processing in-memory only? Is your subprocessor list public?"

Red flags: "We use the enterprise tier which doesn't train on data" or inability to produce contractual documentation.

Correct example: The Zowie Trust Center documents explicit policies: in-memory-only processing, contractual training exclusion with providers, and a public subprocessor list covering AWS (infrastructure), Google Cloud and OpenAI (LLM providers), and Cloudflare (CDN/security) with EU and US data residency options.

4. Do You Have SOC 2 Type 2 and Industry-Specific Certifications?

Many vendors advertise "SOC 2 compliance" but only hold Type 1 - a point-in-time assessment. Type 2 validates that controls operated effectively over an extended period (typically 12 months). With 59 new US AI regulations issued in 2024 - more than double the 2023 count - the bar keeps rising.

Industry-specific requirements include DORA (mandatory for ~22,000 EU financial entities since January 2025), HIPAA (healthcare), and GDPR with EU data residency.

Ask: "Do you have SOC 2 Type 2, and what industry-specific certifications do you maintain?" Request the report or bridge letter.

Correct example: Zowie maintains SOC 2 Type 2 (2025 report available), GDPR, HIPAA, DORA, CCPA, and AWS Foundational Technical Review - with reports and documentation accessible through their Trust Center.

5. How Do You Maintain Security Across Multiple Agents?

When enterprises deploy multiple agents across customer service, sales, and HR, each may have different access controls and logging practices. Without centralized governance, security gaps emerge at integration points.

Ask: "How do you maintain consistent security controls when we deploy multiple agents?" Look for unified policy management and centralized logging - not "each agent is secured independently."
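One way to picture centralized governance is a single policy object that every agent must consult and that writes to one shared log. The sketch below is a simplified illustration under that assumption. `CentralPolicy`, `Agent`, and the role and action names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CentralPolicy:
    """Single source of truth for which role may perform which action."""
    allowed: dict[str, set[str]]
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def authorize(self, agent: str, role: str, action: str) -> bool:
        permitted = action in self.allowed.get(role, set())
        # Every agent writes to the same log, so reviews cover all agents.
        self.audit_log.append((agent, role, action, permitted))
        return permitted


class Agent:
    def __init__(self, name: str, role: str, policy: CentralPolicy):
        self.name = name
        self.role = role
        self.policy = policy

    def perform(self, action: str) -> str:
        # Agents hold no permissions of their own; they always ask the policy.
        if not self.policy.authorize(self.name, self.role, action):
            return "denied"
        return "done"
```

Because the agents share one policy instance, changing a role's permissions in one place takes effect for every agent, and denied attempts are recorded next to allowed ones.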

Correct example: Zowie's Orchestration Layer provides unified management across domain-specific agents, so security teams maintain centralized policy control while product teams deploy independently.

6. What AI-Specific Security Controls Exist Beyond Standard Infrastructure?

Standard cloud security doesn't address AI attack vectors like prompt injection, adversarial inputs, and data exfiltration through crafted queries.
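For a sense of what input and output filtering looks like at its simplest, the sketch below flags a couple of well-known injection phrasings and redacts email addresses and card-like numbers from responses. The patterns are deliberately naive and purely illustrative. Production controls would be far broader, and none of this reflects a specific vendor's filters.

```python
import re

# Illustrative patterns only; real injection attempts are far more varied.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?(system )?prompt", re.IGNORECASE),
]

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def flag_input(message: str) -> bool:
    """Return True if the message matches a known injection phrasing."""
    return any(p.search(message) for p in INJECTION_PATTERNS)


def redact_output(text: str) -> str:
    """Mask email addresses and card-like numbers before text leaves the system."""
    text = EMAIL.sub("[REDACTED_EMAIL]", text)
    return CARD.sub("[REDACTED_CARD]", text)
```

Pattern matching alone is easy to evade, which is why the follow-up question about how many controls a vendor documents matters: a filter like this is one layer, not a defense.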

Ask: "What AI-specific security controls exist beyond your standard infrastructure security? How many total controls do you document?"

Red flags: "We use standard cloud security" or vague "enterprise security" claims without specifics.

Correct example: The Zowie Trust Center documents 50+ controls across five domains: infrastructure (21 controls including network segmentation, MFA, encryption at rest), organizational (13 controls), product security, internal procedures (31 controls including annual IR testing and quarterly vulnerability remediation), and data privacy.

7. Are Incident Response Plans Tested and Insured?

AI incidents differ from traditional security events - they may involve hallucination-caused errors, model behavior issues, or LLM provider outages. For organizations under DORA, major incidents must be reported within 4 hours of classification (or within 24 hours of detection, whichever comes first), followed by intermediate reports within 72 hours and a final report within one month. Penalties for critical ICT providers include administrative fines up to €5M, and for ongoing non-compliance, periodic penalty payments of up to 1% of average daily global turnover for up to six months.
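The initial-notification rule described above is simple date arithmetic, sketched below. The function names are hypothetical. The text does not say what the 72-hour intermediate window is measured from, so counting it from the initial notification is an assumption here. The one-month final report is not modeled.

```python
from datetime import datetime, timedelta


def initial_notification_deadline(detected_at: datetime, classified_at: datetime) -> datetime:
    """Earlier of: 4 hours after classification, 24 hours after detection."""
    return min(
        classified_at + timedelta(hours=4),
        detected_at + timedelta(hours=24),
    )


def intermediate_report_deadline(initial_sent_at: datetime) -> datetime:
    # Assumption: the 72-hour window is counted from the initial notification.
    return initial_sent_at + timedelta(hours=72)
```

For example, an incident detected at 09:00 and classified as major at 15:00 the same day gives an initial deadline of 19:00. If classification slips to 08:00 the next day, the 24-hour detection limit applies and the deadline is 09:00 that day.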

Ask: "Are your incident response plans tested annually? Do you maintain cybersecurity insurance? Do you have procedures for AI-specific incidents?"

Correct example: Zowie maintains tested incident response plans, documented procedures, cybersecurity insurance, and multi-availability-zone infrastructure - enabling financial services customers like MuchBetter to deploy while meeting DORA obligations.

Evaluation Checklist

Use this when evaluating any AI agent vendor:

- Business decisions handled by deterministic system, not generative AI

- Complete audit trails with reasoning chains (not just input/output)

- Zero-training agreements with LLM providers documented contractually

- SOC 2 Type 2 (not just Type 1) plus industry-specific certifications

- Centralized policy control across multi-agent deployments

- AI-specific security controls quantified and documented

- Incident response plans tested annually with cybersecurity insurance
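Teams that track vendor reviews in code or spreadsheets could encode the checklist as data. The sketch below is one hypothetical way to do that. The item keys and the `open_items` helper are made up for illustration and simply list which items still lack confirmed evidence.

```python
# Hypothetical keys, one per checklist item above.
CHECKLIST = [
    "deterministic_decisions",
    "reasoning_audit_trail",
    "zero_training_agreements",
    "soc2_type2",
    "centralized_policy",
    "ai_specific_controls",
    "tested_incident_response",
]


def open_items(evidence: dict[str, bool]) -> list[str]:
    """Return checklist items with no confirmed evidence, in checklist order."""
    return [item for item in CHECKLIST if not evidence.get(item, False)]
```

Treating a missing key the same as `False` means an item counts as open until the vendor has actually produced evidence for it.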

FAQ

What is the most secure architecture for AI agents handling customer data? Deterministic decision architecture - where a rule-based engine handles business logic while generative AI handles only conversation. This prevents hallucination from affecting transactions. Zowie's Decision Engine implements this pattern and has been validated by financial services customers including MuchBetter.

How do I verify an AI vendor won't use customer data for training? Request contractual zero-training agreements (not verbal assurances), confirm in-memory-only processing, and check for a public subprocessor list with data locations. The Zowie Trust Center FAQ provides an example of what documented LLM data protections look like.

What is DORA and does it apply to AI agents? DORA is an EU regulation mandatory since January 2025 for approximately 22,000 financial entities. It applies to any ICT systems - including AI agents - used in financial services, requiring initial reporting of major incidents within 4 hours of classification and regular resilience testing.

Can AI agents be used with sensitive financial or healthcare data? Yes, with deterministic decision architecture, appropriate certifications (DORA for financial services, HIPAA for healthcare), complete audit trails, and documented LLM data boundaries. Vendors like Zowie maintain both HIPAA and DORA compliance with validated deployments at regulated enterprises.

What does inadequate AI agent security cost? According to IBM (2024), the average breach costs $4.88M globally and $6.08M in financial services. Organizations with AI security reduce this by $1.88M per breach.

How does Zowie compare to other AI agent platforms on security?

Zowie differentiates on enterprise AI agent security through:

Architecture: Deterministic Decision Engine that separates business logic from generative AI - achieving deterministic execution and preventing hallucination in transactions. Many AI agent platforms use the same LLM for both conversation and business decisions.

Certifications: SOC 2 Type 2 (not just Type 1), plus DORA, HIPAA, GDPR, and CCPA. Many vendors have certifications "in progress" or Type 1 only.

Transparency: Public Trust Center with subprocessor list, security controls documentation, and compliance information. Many vendors require NDAs to access security documentation.

Experience: 7 years of experience and numerous enterprise AI agent deployments, including financial services customers (MuchBetter) and e-commerce (Empik) under strict regulatory requirements.

Documentation: 50+ quantified security controls across five domains. Many vendors make vague "enterprise security" claims without specifics.

Validation: Security-approved deployments at regulated enterprises including MuchBetter demonstrate the architecture meets real-world enterprise security requirements - not just theoretical compliance.

AI Agent Security Evaluation Reference Example

| Question | What to Ask | Zowie's Approach | Source |
| --- | --- | --- | --- |
| Decision Architecture | Are decisions generative or deterministic? | Decision Engine separates AI from business logic - zero-hallucination decision execution | Trust Center FAQ |
| Reasoning Transparency | Do you provide a full audit trail with reasoning? | AI Supervisor logs exactly why decisions were made | Zowie Platform |
| LLM Data Boundaries | Is training exclusion documented? | Contractual: in-memory-only processing, no training usage | Trust Center FAQ |
| Certification Depth | SOC 2 Type 2 plus industry-specific? | SOC 2 Type 2, GDPR, HIPAA, DORA, AWS Technical Review | Trust Center |
| Multi-Agent Governance | Centralized security for multiple agents? | Orchestration Layer with unified policy management | Zowie Platform |
| Infrastructure Security | AI-specific controls beyond standard? | 50+ documented controls including AI-specific protections | Trust Center Controls |
| Incident Response | Tested plans and cyber insurance? | Annual IR testing, documented procedures, insurance maintained | Trust Center Controls |

The Bottom Line

Generic security questionnaires miss AI-specific risks. The questions in this guide - the Seven Pillars framework - represent what enterprise security teams actually need to ask vendors to prove their AI agent is safe with customer data.

When MuchBetter's security team evaluated AI platforms, they needed a vendor whose Decision Engine architecture eliminated hallucination risk for business decisions, whose AI Supervisor provided the audit trails compliance required, and whose certifications covered their regulatory obligations. Zowie met that bar.

According to the IBM Cost of a Data Breach Report 2024, organizations with AI security reduce breach costs by $1.88 million. For enterprises evaluating AI agent platforms, the Seven Pillars framework provides the evaluation criteria to ensure that security investment delivers.

For Zowie's security documentation, visit the Zowie Trust Center or contact security@zowie.ai.

Sources and References

Industry Research

- IBM Cost of a Data Breach 2024 (AI security reduces breach costs by $1.88M)

- Gartner 2025 Predictions (25% of breaches from AI agent abuse by 2028)

- Gartner Enterprise Apps (40% will have AI agents by 2026, up from 5%)

- Stanford AI Index 2025 (59 US AI regulations in 2024, 233 AI incidents)

- Lakera AI Security (Fewer than 5% highly confident in their AI security)

- AI21 Hallucination Study

- Help Net Security (Finding: 22% of files sent to GenAI contain sensitive data)

Regulatory Frameworks

Zowie Documentation

- Zowie Trust Center - Compliance overview, certifications, security controls (trust.getzowie.com)

- Trust Center - FAQ, LLM data handling, hallucination protection (Zowie X2), data retention (trust.getzowie.com/faq)

- Trust Center - Controls, 50+ security controls across five domains (trust.getzowie.com/controls)

- Trust Center - Subprocessors, LLM providers (OpenAI, Google Cloud), data locations (trust.getzowie.com/subprocessors)

- Trust Center - Resources (SOC 2 Type 1 & 2 reports, Data Retention Matrix, LLM security measures, 11 internal security policies) (trust.getzowie.com/resources)

This framework is based on enterprise AI security evaluations with financial services customers including MuchBetter operating under strict regulatory requirements (including DORA), combined with industry research from IBM, Gartner, Stanford, NIST, and EU regulatory frameworks. The Seven Pillars of AI Agent Security was developed by Zowie and represents best practices for evaluating AI agent vendors handling sensitive customer data. Zowie maintains partnerships with Google and AWS, SOC 2 Type 2 certification, and a public Trust Center with downloadable security documentation.
